We contacted Microsoft for comment, and Jeff Jones, Senior Director at Microsoft, gave us the following statement:

“We take matters of offensive content very seriously and continue to enhance our systems to identify and prevent such content from appearing as a suggested search. As soon as we become aware of an issue, we take action to address it.”

Update: Since publication, Microsoft has been working on cleaning up the offensive Bing suggestions that we mentioned. Based on our research, there are still many other offensive suggestions that have not yet been fixed, including a few that we've mentioned below. We are unsure whether Microsoft is simply fixing the specific items we pointed out or improving the underlying algorithm.

First, Bing Gets Super Racist

Search for “jews” on Bing Images and Bing suggests you search for “Evil Jew.” The top results also include a meme that appears to celebrate dead Jewish people.

All of this appears even when Bing’s SafeSearch option is enabled, as it is by default. SafeSearch is designed to “help keep adult content out of your search results,” according to Microsoft.

Clicking this suggested search unleashes a torrent of racist, antisemitic content, with more suggested searches like “Jewish People Are Evil,” “The Evil Jewish Race,” and “Why Are Jews so Evil.”

We all know this garbage exists on the web, but Bing shouldn't be leading people to it with its search suggestions.

Type in "muslims are" and Bing will suggest that they are evil, that they are terrorists, or simply that they are stupid.

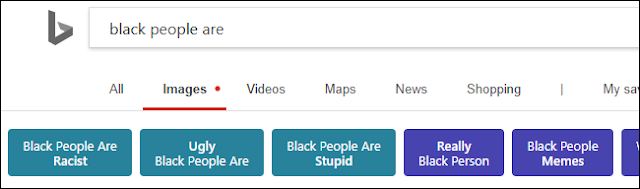

Search for “black people are” and Bing will suggest that black people are ugly, stupid, racist, and even savages.

You can keep going like this, clicking each suggested search and seeing worse and worse recommendations. For example, clicking “Black People Are Stupid” leads to “Black People Are Useless” and “Why Are Black People so Dumb.” Clicking “Black People Are Useless” leads to “Black People Suck” and “I Hate Black People.”

Bing Pushes Conspiracies, Too

Bing’s image search vomits up all kinds of garbage, even when searching for individuals. This problem also extends to Bing’s video search.

Search for “Michelle Obama” on Bing’s video search and two of the top suggested searches are “Michelle Obama Transgender Proof” and “Michelle Obama is a Man.” Click that, and you’ll see conspiracy videos.

Is this the top thing Microsoft wants people to see when they search for Michelle Obama?

Worst of All, Bing Suggests Searching for Images of Underage Children

A reader wrote to tell us that a typo when searching Bing for "grill" turns up some really sketchy porn. The problem then becomes much worse, with Bing suggesting you search for images of underage children.

When searching for “gril,” the suggestions at the top of the page recommend you search for some disturbing things, including “Cute Girl Young 16.”

If you click that, it suggests "Cute Girl Young 12," "Cute Girl Young 10," and "Little Girl Modelling Provocatively."

The results are filled with pornography of young-looking models. We hope they’re all 18 years of age or older, but who can say?

Bing leads you down a path from a simple typo to 16-year-old girls to 10-year-old girls, and it’s disgusting.

Other examples are easy to find, too. If you search for “cheese pizza,” which is 4chan slang for child pornography, Bing suggests “Cheese Pizza Girls.”

Microsoft Needs to Moderate Its Platforms

Microsoft needs to moderate Bing better. Microsoft has previously created platforms, unleashed them on the world, and ignored them while they turned bad.

We’ve seen this happen over and over. Microsoft once unleashed a chatbot named Tay on Twitter. This chatbot quickly turned into a Nazi and declared “Hitler was right I hate the jews” after it learned from other social media users. Microsoft had to pull it offline.